
Time to think

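Has Big Tech lived up to its professed altruistic ideals? Google's unofficial motto, "Don't be evil", implies that its intentions have always been good, even though the company has adopted deceptive and unethical practices in many areas (notably facial recognition software, whose development reportedly exploited homeless people of colour).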

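Bill Gates now heads one of the world's largest private philanthropic organisations, yet Microsoft, the company he founded, has been open to complying with censorship regimes in some countries. Apple has given millions of dollars in financial aid for disaster relief but has a poor record on working conditions. Working conditions for Amazon employees have also come under close scrutiny, especially since the outbreak of the pandemic and, more recently, after a tornado destroyed one of Amazon's giant US warehouses. As for Facebook (now Meta), it has blood on its hands for failing to stop its platform being used to incite violence against the Rohingya minority in Myanmar.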

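But whatever the big five companies say, computing technology has never been about the greater good. It has always been about extracting more productivity. From the moment humans first knapped flint, technology has been a way of improving efficiency; today, efficiency is improved for profit. Before digital computers existed, we used human computers: people hired to carry out calculations by hand, over and over. They were usually women, because the role was considered low-status. The aim was to maximise production, to capitalise on it and to retain market power.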


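But there is more to it than that. Big Tech has grown far beyond the industry's early expectations. The rise of machine learning, and of the mass harvesting of user data that powers it, means that Silicon Valley companies have a profound influence on our lives. They hold enormous power, and we cannot entirely avoid that if we want to enjoy the conveniences of a digital society. It is a trade-off. Our society thrives on connectedness and unlimited access to information; sometimes that is a necessity. Imagine what the pandemic would have been like without cyberspace. Imagine getting through a day without using your phone.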

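At present, the major developments in artificial intelligence come from the countries that have the money, the talent and the data. The world's most powerful nations, the US and China, are also the AI superpowers. Start-ups on the verge of new breakthroughs are bought up by established companies. University researchers are lured into industry by high salaries and (perhaps) better working conditions. Compared with the budgets of big companies, the resources of grant-funded projects are negligible. Can universities really have much influence on the course of the AI revolution?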

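They can, but the role has changed. Universities now work on different terrain from before, but we can still innovate, still nurture ideas and still champion altruistic, beneficial technology. We can explore data and advance open science. We can work with industry to co-create and advise. We can hold up a mirror to Big Tech and force it to look at itself.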

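Academic research on AI ethics has grown markedly over the past few years. AI ethics was already an important subject in its own right, but the escalation of algorithmic decision-making, and the consequences when those decisions go wrong, has pushed ethics into the spotlight. The UK House of Lords' Select Committee on Artificial Intelligence published a report in late 2018. The report was well received by the AI community and recognised that ethical development is key. It stated: "The UK's strengths in law, research, financial services and civic institutions mean it is well placed to help shape the ethical development of artificial intelligence and to do so on the global stage."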

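Big Tech is centred on science, technology, engineering and mathematics and often treats other fields as secondary, regarding centuries of philosophy, the arts, humanities and social sciences as irrelevant. But it is gradually learning that engagement is essential. The growing emphasis on responsible technology development means that industry is listening to outside experts. Part of this is down to regulatory pressure, much of it originating in academia, but it also stems from an encouraging rise in public awareness, engagement and activism. That did not stop Google from dismissing its ethics researchers Timnit Gebru and Margaret Mitchell over research that was critical of the company, but it did force Google to re-evaluate its practices after a fierce backlash.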

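But universities should not be too smug. We need to put our own house in order too. We must judge the context when we work with industry. Many universities accept funding from tech companies, and it is not unprecedented for money to sway judgement. The Massachusetts Institute of Technology learned that lesson painfully after it continued to accept donations from Jeffrey Epstein following his conviction as a sex offender.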

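The slow, deep practices of scholarship are often mocked by the fast-moving tech world, but they provide space to reflect on how new technologies are developed, deployed and used. We are the antidote to the goal-driven world of business.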

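That said, we cannot be too slow either. We are both the perpetrators and the victims of our own productivity practices. If we spend months or even years waiting for a journal article to be published, we miss the moment. We must rethink how we respond and engage. Where Big Tech is concerned, we can contribute in new ways, but we cannot afford to fall behind.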

Kate Devlin is reader in artificial intelligence and society at King's College London.

Keeping good company

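When will the end come for humanity? Will we go the way of the dinosaurs, wiped out by a giant meteorite falling from the sky? Will it be a catastrophic thermonuclear explosion, as we feared during the Cold War? Or a global pandemic many times deadlier than Covid-19?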

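In his 2020 book The Precipice: Existential Risk and the Future of Humanity, the Australian philosopher Toby Ord argues that the greatest threat in fact comes from a superintelligent AI whose values are badly misaligned with humanity's. He even boldly (if that is the right word) estimates a 10 per cent chance of such an ending in the next century.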

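I have devoted my life to developing AI, so you might think such worries would give me pause. They don't. When I go to sleep at night, I would rather dream of all the promise held out by science in general and by AI in particular.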

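It may take decades, or even centuries, to build AI that matches human intelligence. But once we can, it would be conceited to think that machines could not quickly surpass it. After all, machines have many natural advantages over humans. Computers work at electronic speeds, far faster than biological ones. They have much greater memory, can absorb datasets far larger than any human eye could take in, and never forget a single number. Indeed, in narrow domains such as playing chess, reading X-rays or translating Mandarin into English, computers already outperform humans.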

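A more general artificial superintelligence remains a distant prospect, but that is not why I am unworried about its possible impact on humanity's survival. I am not worried because we already have such a machine, one with far greater intelligence, capability and resources than any individual. That machine is the company.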

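No individual could design and build a modern microprocessor alone, but Intel can. No individual could design and build a nuclear power station alone, but General Electric can. For a long time to come, this kind of collective superintelligence is likely to exceed the capabilities of machine superintelligence.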

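Of course, the problem of misaligned values remains. Indeed, it seems to be the main problem with companies today. The individuals who make up a company (employees, board members and shareholders) may be ethical and responsible, but what emerges from their combined efforts may not be. For example, just 100 companies are responsible for the vast majority of greenhouse gas emissions. I could fill a whole book with recent examples of technology companies failing to act as good corporate citizens. In fact, I have just written one.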

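Take Facebook's newsfeed algorithm. At the software level, it is an example of an algorithm misaligned with the public good. Facebook is simply trying to increase user engagement. Engagement is, of course, hard to measure, so Facebook decided to maximise clicks instead. This has caused many problems: filter bubbles, fake news, clickbait, political extremism, even genocide.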

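This flags a value alignment problem at the level of the company. How could Facebook decide that clicks were the overall goal? In September 2020, Tim Kendall, who was Facebook's director of monetisation from 2006 to 2010, told a Congressional committee that the company "tried to capture as much attention as possible... We took a page from Big Tobacco's playbook, working to make our product addictive from the outset. We initially used engagement as a proxy for user benefit. But we also started to realise that engagement could also mean that users could not leave the platform in their own best long-term interest... We started to see real-life consequences, but they weren't given much weight. Engagement always came first; it trumped everything else."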

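It is easy to forget that corporations are entirely human-made institutions that emerged only during the Industrial Revolution. They provide the scale and coordination needed to build new technologies. Limited liability lets company executives take risks on new products and new markets without incurring personal debt. In modern times, launching new products and entering new markets is typically funded by various forms of venture capital as well as by the traditional bond and equity markets.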

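Most listed companies are recent creations, and many will soon be made obsolete by technological change. It is predicted that three-quarters of the companies currently in the Standard & Poor's 500 index will disappear within the next 10 years. About 20 years ago, only four of the world's 10 most valuable companies were technology companies: the industrial giant General Electric topped the list, followed by Cisco Systems, Exxon Mobil, Pfizer and Microsoft. Today, eight of the top 10 are digital technology companies, led by Apple, Microsoft and Google's parent company, Alphabet.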

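So perhaps it is time to think about how we should reinvent the corporation to suit the ongoing digital revolution better. How can we ensure that corporations are better aligned with the public good? How can we share the fruits of innovation more fairly?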

That is the superintelligence value alignment problem that keeps me awake at night.

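And this is where academia has an important part to play. We need scholars from every field to help imagine and design this future. Economists to design new markets. Lawyers to draft new regulations. Philosophers to address the many ethical challenges. Sociologists to ensure that humans are at the centre of social structures. Historians to draw lessons from past industrial transformations. We also need many scholars from across the sciences and humanities to ensure that superintelligence, in machines and in corporations alike, creates a better world.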

Toby Walsh is an Australian Research Council (ARC) Laureate Fellow and Scientia professor of artificial intelligence at UNSW Sydney and CSIRO Data61. His most recent book, 2062: The World that AI Made, explores what the world will look like when machines match human intelligence. His next book, Machines Behaving Badly: The Morality of AI, will be published in May.

Driving responsibility

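In 1995, Terry Bynum and I wrote a feature for Times Higher Education that asked: "What will happen to human relationships and communities when most human activities are carried out online from home? Whose laws will apply in cyberspace when hundreds of countries are incorporated into the global network? Will the poor be disenfranchised, cut off from job opportunities, education, entertainment, medical care, shopping and voting, because they cannot afford a connection to the global information network?" Twenty-five years on, I am still asking these questions.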

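By now, the world has a deep-seated dependence on digital technology. Responsible Innovation in Canada and Beyond: Understanding and Improving the Social Impacts of Technology, a report published by the Canadian government in January 2021, calls for solutions that are "inclusive and just, undo inequalities, share positive outcomes, and permit user agency". I agree. To that end, we need to put much more emphasis on what we now call digital ethics: the bringing together of digital technology and human values so that digital technology advances, rather than damages, human values.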

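Two recent ethical hotspots reinforce this need. One is the estimate that 360 million people over the age of 65 are currently on the "wrong" side of the digital divide; as the world has come to rely more heavily on digital technology during the pandemic, they face even greater risk. The other is that more than 700 UK sub-postmasters were accused of theft because of a faulty digital accounting system. Unverified digital forensics were the only evidence for the convictions, many of which have now been overturned.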

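Only virtuous action can bring about an ethical digital age. Rules may offer some guidance, but they are a double-edged sword. Within industry and government, a compliance culture has taken firm hold, stifling every opportunity for dialogue and analysis of the complex socio-ethical issues that technology raises. The organisational silo mentality must also give way to inclusivity and empathy.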

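We can promote genuinely virtuous action in three different ways. Top-down drivers are usually mandated by bodies of authority and dictate how resources should be allocated to achieve some overall goal. Middle-out drivers empower everyone within an organisation to propose new ideas and to initiate and support change. Bottom-up drivers usually arise from grassroots collective action, generally citizen-led.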

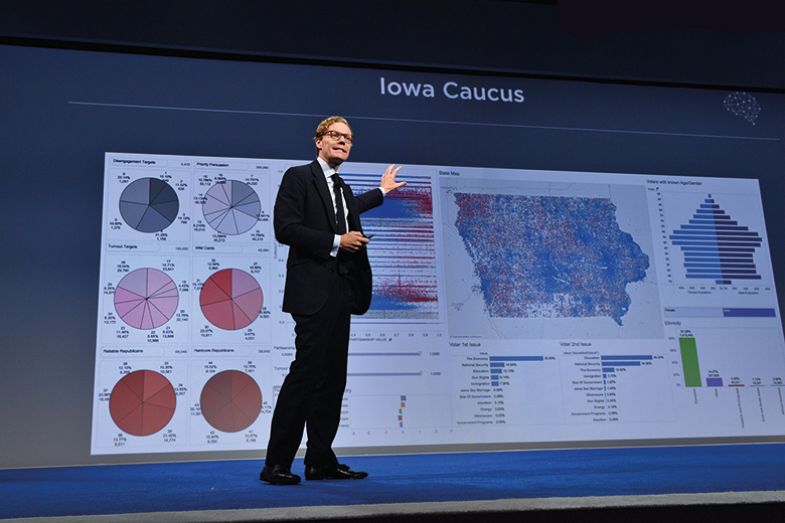

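Whistleblowing is a bottom-up driver, and it has been used successfully to expose unethical conduct, mostly relating to personal data, and sometimes to help correct it. In 2013, Edward Snowden leaked highly classified information from the US National Security Agency, revealing numerous global surveillance programmes that the agency ran in cooperation with telecommunications companies and European governments. In 2018, Christopher Wylie revealed that Cambridge Analytica, a political consultancy working for the Trump campaign, had illegitimately obtained the Facebook information of about 87 million people and used it to build psychological profiles of voters and then to spread narratives on social media, igniting a culture war, suppressing black voter turnout and exacerbating racist views. In 2021, Frances Haugen disclosed thousands of internal Facebook documents to the US Securities and Exchange Commission and The Wall Street Journal. This led to a series of investigations into Facebook covering updates to the algorithms that drive the platform, its impact on young people, exemptions for high-profile users and its response to misinformation and disinformation.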

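Some ethical hotspots may be obvious and some not, but all must be addressed. That can be achieved only through effective digital ethics education and awareness programmes that promote proactive individual social responsibility inside and outside work, while taking account of both shared global values and local cultural differences. Discussion, dialogue, storytelling, case study analysis, mentoring and counselling are all useful techniques.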

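The way forward, therefore, is a middle-out, interdisciplinary, lifelong-learning partnership spanning primary, secondary, further, higher and other forms of education. Universities have a key role. While some have introduced digital ethics into technology-related degree programmes, others have not, and most of the existing courses are optional. That has to change. Relevant digital ethics courses should be compulsory in undergraduate and postgraduate programmes across many disciplines, including technology and engineering, business and management, science, finance and law.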

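Cultivating practical wisdom and developing students' confidence and skills to act responsibly and ethically will raise graduates' awareness and sense of responsibility as they enter the workplace. To have a lasting impact, however, universities must engage at political, commercial, industrial, professional and international levels to influence the strategies and attitudes of those beyond academia. They must develop and promote a new vision of the digital future, one that is theoretically grounded yet pragmatic, if industry and government are to embrace it.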

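We must all, to some degree, accept and adjust to the fact that we are technologists. How we educate future generations must reflect this change, so that the digital age is good for everyone and good for the world as a whole.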

Simon Rogerson is professor emeritus at De Montfort University. He helped to found the Centre for Computing and Social Responsibility, the ETHICOMP international computer ethics conference and the Journal of Information, Communication and Ethics in Society. He was Europe's first professor of computer ethics. His latest book is The Evolving Landscape of Ethical Digital Technology.

Leading the field

The greatest danger posed by Big Tech is nothing less than the loss of human autonomy for the sake of greater efficiency.

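Even when a decision that affects us is made by another human being (a family member, the government or an employer, say), we understand the power relationship between that decision-maker and ourselves, and we can choose to obey or to protest. If, however, the computer says "no" within a system we cannot escape, protest becomes pointless because there is no "social" element to appeal to.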

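Moreover, Big Tech takes away core competences that form an important part of our intelligence and, ultimately, of our culture. Take map-reading and orientation. Relying on Google Maps may be effective and convenient, but habitual use means that we lose the ability to work out a route when driving from A to B. More importantly, we lose the ability to navigate and make sense of our own geographical surroundings, and we become ever more dependent on technology to do it for us.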

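Many people may not care about this trade-off between convenience and capability. Others may barely notice it. But the loss of decision-making and other abilities to technology is pervasive. A more worrying example concerning autonomy is the profiling made possible by tracking technologies when we browse the World Wide Web and take part in social media. The data collected about our preferences and interests is all-encompassing; it covers not only our shopping choices but also our aesthetic tastes, our religious or political beliefs and sensitive data about health or sexual orientation. Profiling enables micro-targeting of what we consume, news results included, and leads to the widely lamented phenomena known as filter bubbles or echo chambers.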

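These algorithms serve us content that we evidently desire, or at least agree with, but they sort us into groups from which we cannot escape. Profiling strips us of the autonomy to decide which content we access; more broadly, the polarisation of opinion and the micro-targeting of advertising directly affect democracy, as the Facebook-Cambridge Analytica scandal showed.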

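Furthermore, while knowledge extracted from big data has many economic, social and medical benefits, there are also plenty of examples where more knowledge is harmful. Imagine, for instance, a "free" online game that can profile an individual's likelihood of developing early-onset dementia. Learning one's risk profile may affect a person's mental health. Sharing the information with companies could lead to some people being turned down for jobs, credit or insurance. It would undermine the spreading of risk on which the whole health insurance industry is currently predicated.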

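Finally, what is disturbing is not only what Big Tech does to us but also its sheer power. In essence, Big Tech is built on the networks that connect us. The more people a network connects, the more useful it becomes, which inevitably leads to monopoly. That is true of e-commerce, online auctions, search engines and social media.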

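In addition, Big Tech's size and power allow it to invest heavily in research and development, which means it is always ahead in the innovation game. That makes it hard for governments or publicly funded institutions (such as universities) to keep up. In turn, it means that the research and innovation agenda is geared towards making Big Tech richer and even more powerful.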

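This cycle creates a mindset that unfettered innovation through big data, data mining and AI is imperative, regardless of ethics and of the impact on humanity: it is cast as the inevitable next evolutionary step in human development. Universities should question that inevitability through their core missions of teaching and research. They must protect themselves from the influence of political and commercial interests.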

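In particular, universities should encourage genuinely interdisciplinary research that integrates all disciplines as equal partners in matters such as funding and project leadership. For example, most of UK Research and Innovation's funding in the area of AI and autonomous systems goes to computer science and engineering projects. This is a mistake: it leaves ethicists, lawyers and researchers in the humanities and social sciences with little opportunity to reflect critically on what is actually good for the overall progress of humanity.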

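Universities should lead the conversation about Big Tech and bring about cross-fertilisation between disciplines and ways of thinking. After all, how you define the challenges posed by Big Tech depends on how you look at it. Computer science and engineering teaching should therefore also include elements of ethics, law (such as data protection law), social sciences and humanities.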

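Of course, however public resources are allocated, they will always be dwarfed by the resources available to Big Tech. Some therefore see the power relationship between university research and teaching and Big Tech innovation as akin to that between a person with a toy gun and Godzilla. But sometimes the question of who prevails is not decided solely by the power conferred by technology and money. It matters just as much who can shape humanity's mindset, and that is where universities have a stake in this game.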

Julia Hörnle is professor of internet law at the Centre for Commercial Law Studies, Queen Mary University of London.

This article was translated into Chinese for Times Higher Education by 陆子惠 (Lu Zihui).