Who do you think wrote the following? “Globally, stocks delivered positive absolute returns in the second quarter. Those positive returns followed a broader trend of economic strength as two of the fund’s key indicators, MSCI World Index and China PMI, trended positively in the same period.”

The text reads like the kind of financial report an eager young graduate in a City or Wall Street firm might write. Although the topic would be unlikely to quicken their pulse, they might well count themselves lucky to have landed a graduate-level job at all. In 2013, the UK’s Office for National Statistics calculated that nearly half of recent graduates in employment had jobs for which a degree was not traditionally required.

But, as you may already have twigged, the author of the above passage was not human. It was produced by Narrative Science, a Chicago-based company whose software also writes news stories about company earnings for Forbes, automating what may once have been a job for a young financial journalist.

When university leaders reflect on the future for their institutions and their sector, they often neglect the threats posed by artificial intelligence. When Times Higher Education recently asked sector leaders for their predictions of what universities would look like in 2030, there was scant mention of the impact of technology except in so far as it directly affects pedagogy, via innovations such as massive open online courses (“Future perfect: what will universities look like in 2030?”, Features, 24 December 2015). Only one contributor, a retired computer scientist, predicted that automation would rob universities of students to teach.

But the torrent of articles and books published in recent years shows that humans are once again worried that robots are about to displace them in the workplace – and that could have profound effects on universities. “Robots: friend or foe?” asked the Financial Times in a series of articles on advances in automation in May. In one, a journalist and an algorithm called “Emma” were both set the task of writing a story about employment data. Reassuringly for the journalist, while Emma was much faster, she missed crucial newsworthy points and used some odd phrases. But academics would be mistaken to assume that machines will sweep away all the low-skilled, non-graduate jobs before they start nibbling at the more complex professional roles envisaged for graduates.

According to Martin Ford, a Silicon Valley entrepreneur whose 2015 book The Rise of the Robots makes grim reading for white-collar workers, it is “becoming clear that smart software automation applications are rapidly climbing the skills ladder and threatening jobs taken by university graduates”. Even whizz-kids with plum jobs in financial services are not safe. In an article titled “The Robots are Coming for Wall Street”, The New York Times reported in February on software written by a company called Kensho that can automatically predict how markets will move in response to different types of world events, such as an escalation in the Syrian civil war. It can generate in minutes a report that “would have taken days, probably 40 man-hours, from people who were making an average of $350,000 (£266,000) to $500,000 a year”, Daniel Nadler, founder of Kensho, told the newspaper. Within a decade, a third to a half of finance employees will lose their jobs to this kind of software, he predicted. Meanwhile, last November, Anthony Jenkins, former chief executive of Barclays, predicted a similar swathe of job losses across banking because of the rise of new technology.

The legal field is also being shaken up, Ford tells THE. The consultancy Deloitte has predicted that 100,000 legal jobs in the UK alone could be automated over the next 20 years. A company called ROSS Intelligence, for instance, is offering law firms a tool that automatically reads legal queries and returns the most relevant answers from the mountain of case law that lawyers normally have to wade through manually.

One of the reasons financial analysis is proving unexpectedly easy to automate is that what analysts do, while highly specialised, is in some ways very predictable. “Could another person learn to do your job by studying a detailed record of everything you’ve done in the past?” Ford asks in The Rise of the Robots. “If so, then there’s a good chance that an algorithm may someday be able to learn to do much, or all, of your job.”

Of course, universities argue that higher education is about more than getting a good job. But for 17-year-olds deciding whether to take on large debts to do a degree, better employment and salary prospects are key considerations. If large, high-quality employers such as banks and law firms drop out of university milk rounds, average graduate earnings will inevitably be less of a draw.

The good news for universities and academics is that there are still skills that observers believe machines will struggle to master – although they are unsure for how long. The most obvious one is creativity. “As we look across examples of things we haven’t seen computers do yet, this idea of the ‘new idea’ keeps recurring,” write Massachusetts Institute of Technology academics Erik Brynjolfsson and Andrew McAfee in their 2014 book The Second Machine Age. “We’ve never seen a truly creative machine, or an entrepreneurial one, or an innovative one.”

But what kind of creativity is likely to remain uniquely human? You might, for instance, regard designing a radically new bicycle stem (the bit that holds the handlebars to the frame) as a straightforwardly creative act. If it is, then creative machines may already be among us: a software package called Dreamcatcher takes specifications for new components and models thousands of possibilities with different materials until it comes up with the optimum design. That solution may be radically novel: one of the stems it invented, for instance, consists of an exoskeleton-like hollow lattice.

Indeed, Brynjolfsson and McAfee themselves point out that what is defined as “routine” and not particularly creative keeps changing as machines master new tasks. Computers have long been able to beat humans at chess, and from a computing point of view the game is now regarded as relatively simple – machines just use brute processing power to calculate the best move. Yet before computers mastered the game, human grandmasters were considered geniuses, and chess was seen as “one of the highest expressions of human ability”, they write. In other words, many of the things we might think of as creative are automatable if a machine is given the right parameters.

Machines can work towards specific goals set for them, agrees Dan Schwartz, dean of the Graduate School of Education at Stanford University, which has spawned many of the tech firms now dreaming up ways to make humans obsolete. But it is “hard for them to redefine what the goal should be”, he tells THE.

Academics will be pleased to hear that MIT’s Brynjolfsson thinks that the humble essay is still a good way to train students to come up with these kinds of fresh ideas, since it “forces you to organise your thoughts”. The need to foster creativity also “means doing more unstructured work”, he adds. At MIT, “we do much less rote and repetitive learning. The things people enjoy the most…are the things machines do worst.”

Another trait that experts seem to agree will remain uniquely human for some time is social intelligence. “While algorithms and robots can now reproduce some aspects of human social interaction, the real-time recognition of natural human emotion remains a challenging problem, and the ability to respond intelligently to such inputs is even more difficult,” write University of Oxford academics Carl Frey and Michael Osborne in a widely cited 2013 paper that predicts that nearly half of US jobs will be automatable in the next 20 years.

“What machines cannot do is empathy,” agrees Ian Goldin, director of the Oxford Martin School, a multidisciplinary centre at Oxford that explores large global challenges (the Frey and Osborne paper, “The Future of Employment: How susceptible are jobs to computerisation?”, was part of its programme of research into future technologies). Machines “cannot give what is uniquely human, which is love, compassion and sympathetic reaction”.

Take someone whose house has just burned down and who is on the phone to their insurance company, Goldin says. An automated service may be able to process their call adequately. But without an empathetic human on the end of the line, the devastated customer may think about switching insurers. Customers may even be willing to pay more for humans’ “prized” empathetic qualities, he says. And while it is probably fair to say that developing traits such as compassion and emotional intelligence is not the focus of most higher education courses, there is evidence that university graduates are more likely to volunteer, less racist and more trusting.

This human need to connect with other minds might also be good news for those studying the performing arts. Computers can already compose music, but, according to Goldin, “we don’t want to go to a concert and listen to a computer all evening. What we want is experience, and we want to share it with other people, not machines.”

Related to social intelligence is the capacity to think about human values. For example, humans will still be needed to make ethical and moral decisions, according to Stanford’s Schwartz. If he were seriously ill in a hospital bed, “I don’t really want a machine to decide I don’t have a chance of life.” For this reason, Schwartz thinks that arts and humanities subjects – which are often dismissed for their lack of obvious utility – may end up having the most longevity. “It has always been the argument for the liberal arts and humanities” that they make students better able to “make decisions about human experience”, he notes.

Goldin has also spoken about the humanities as being “timeless” disciplines because they give graduates the skills to navigate periods of rapid change. Humans have been asking how to form judgements and build value systems since “Aristotle and before”, he says, and will continue to do so. By contrast, Goldin has “no idea how physics will look in 50 years”. However, he stresses that he is not arguing that students should abandon the sciences, whose grappling with fundamental questions will still help to guide graduates over the course of their lives even if the frontiers of knowledge advance.

The third human trait that machines are struggling to emulate is less cerebral: dexterity. One example of a degree-level job that relies heavily on this, according to Ford, is nursing. But his other prominent examples are the “many skilled trade occupations typically shunned by university graduates: plumbers, electricians and the like”.

Although robots are becoming increasingly dexterous – mechanical arms can now sort through piles of cluttered boxes with spooky speed and accuracy – they are still much worse than humans at understanding messy environments and deftly manipulating the objects within them. “Would you give your baby to a machine to change their nappy? Unlikely,” says Goldin.

Worryingly for universities, it might turn out to be far more cost-effective for businesses to automate desk-bound “knowledge workers” than those who move things around for a living. This is because a single computer can use its vast processing power to do the job of tens of thousands of human brains. But a robot on a production line can, at most, replace a handful of humans. It cannot be in two places at once.

Also ominous is Brynjolfsson’s confession that while The Second Machine Age cited three uniquely human skills, he now believes the number may be down to two. While creativity and “complex communication” with humans remain, pattern recognition is being mastered by computers with surprising rapidity. They are, for instance, becoming adept at taking mountains of data – credit scores, for example – and finding trends, Brynjolfsson says. “Machines can learn pretty well if you have a large quantity of data.”

To help students cope with this blistering pace of change, everyone seems to agree that universities must, above all, instil in students a hunger to continue their education beyond graduation and keep their skill sets as broad as possible. “The worst choice a student could make would be to make a major investment in acquiring skills in some fundamentally routine and predictable occupation,” Ford says.

For Schwartz, universities have to open students’ minds to the “satisfaction” of “understanding deeply”. But, recalling his own physics students’ complaints that his classes were not preparing them for an upcoming test, he thinks that current university courses often reward rote memorisation instead.

There are, of course, numerous sceptics who doubt that increasingly capable machines will leave humans workless. “We’ve always been finding new jobs,” says Stephen Watt, a computer science professor and dean of mathematics at the University of Waterloo in Canada. “My view is that this has been happening for decades, if not centuries, and it will continue for decades and centuries.”

A study carried out by researchers from the Centre for European Economic Research in Mannheim, Germany, for the Organisation for Economic Co-operation and Development, published earlier this year, also hits back at Frey and Osborne’s claims that half the US workforce could be automated by the mid-2030s. The researchers estimate that only 9 per cent of jobs are automatable. They also agree with Watt that new technologies will create new, as yet unimaginable occupations.

Watt is also bullish about the future for universities. He believes that the technological churn will create an unprecedented demand from students to be taught “how to learn”. But Ford takes a much more pessimistic view. Graduates on both sides of the Atlantic are already overqualified and struggle to find jobs that use their skills, he writes in The Rise of the Robots, and this will only get worse as automation progresses. He thinks that acting on the “conventional wisdom” that more investment in education will allow humans to stay ahead of machines amounts to little more than a doomed attempt to funnel ever more disappointed graduates into a shrinking set of “graduate-level” jobs.

For this reason, Ford argues, universities will “have to shift away from the current emphasis on specialised vocational training and instead emphasise the intrinsic value of education, both for the individual and as a public good that benefits everyone”.

Since at least the time of John Maynard Keynes, optimists about the machine age have seen it as offering humans a life of leisure, with untold opportunities for personal fulfilment. And it is easy to see how higher education could play a significant role in that. But if automation wipes out the graduate earnings premium and universities come to resemble a cross between a finishing school and a particularly rigorous book club, the question is whether anyone will be willing to foot the bill.

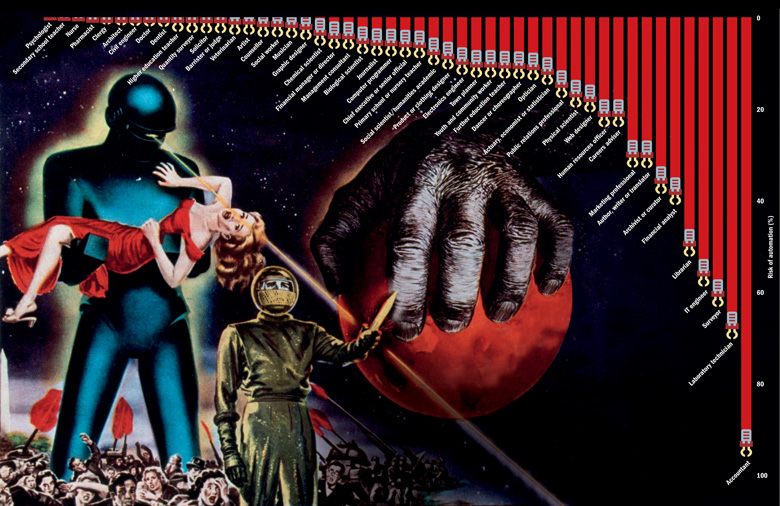

Rise of the machines: which graduate jobs are most automatable?

This list shows a selection of jobs typically done by graduates and sets out how likely they are to be automatable over the next 20 years.

The data are drawn from a 2013 study of the US labour market by Carl Frey and Michael Osborne, academics at the University of Oxford, and adapted for the UK by Deloitte and the BBC.

The ranking is based on how heavily the job relies on the three skills that Frey and Osborne think are likely to remain the preserve of humans: creative intelligence, social intelligence and the perception and manipulation of objects.

It is important to remember that the rankings measure whether a role can be automated, not whether it will be. Businesses may decide that it is not worth the investment, or some professional groups may use their power to block technologies that could replace them.

On the whole, roles typically linked to one specific degree course (such as architect) have a lower risk of automation than jobs open to graduates of many subjects (such as accountant). Nonetheless, students studying to be psychologists, priests or vets – roles that all carry a very low automation risk – should not feel too relaxed, because competition for jobs in these areas is likely to increase as humans are forced out of other sectors.

It should also be noted that some observers disagree with the Frey and Osborne rankings. Frey and Osborne rate pharmacists as being very safe from automation, but, according to Martin Ford, author of The Rise of the Robots, “much of what pharmacists do is almost ideally suited to automation”. He points to a pharmacy at the University of California Medical Center in San Francisco that is run entirely by robots.

Perhaps, then, it will not be long before a machine comes up with an even more accurate ranking.

Efficiency drive: could researchers be automated?

A clue to the rapidly advancing impact of intelligent machines on scientific research appeared on the front cover of Nature earlier this year. “Machine-learning algorithm mines unreported ‘dark’ reactions to predict successful syntheses,” the journal reported.

The algorithm in question was the work of chemistry and computer science professors at Haverford College in Pennsylvania, who took unpublished data about failed chemical reactions and used it to create an algorithm that proved to be better at predicting reaction results than the team itself.

This is just one of the ways that machines are assisting, if not quite yet replacing, researchers in the lab. One of the most famous tools that scientists now have at their disposal is Eureqa: software produced by a company called Nutonian that takes data and works out a model explaining the results. According to Michael Schmidt, founder of Nutonian, the program (which is free for academics to use) can help in “any situation where people collect a lot of data but have few theories” to explain all the information. “I’ve seen [it used in] everything from cosmology – finding spins on galaxies – to medicine.”

Back in 2009, when the software was launched, it was already able to use a pendulum – releasing it from different positions to see how it swings – to generate data and, from this, to come up with physical laws, including Newton’s Second Law of Motion (relating force to mass and acceleration). Increasingly powerful computers and advances in mathematics have made this kind of number crunching possible, explains Schmidt, and in the past three years, Eureqa has contributed to more than 500 papers across a broad range of disciplines.

Machines can also help to simplify literature reviews. Peter Murray-Rust, a chemist and open data campaigner based at the University of Cambridge, says: “We absolutely have to deal with the flood of information using machines…There are now 10,000 [new] scientific papers per day.” While it is already technologically possible for computers to perform literature reviews, “it is not politically or economically possible” because research papers are still written in what he calls a “19th-century print format”, rather than a semantic, machine-readable format.

The time savings could be enormous. It can take a week for a team of medical scientists to go through previous clinical trials, says Murray-Rust, with each paper being looked at for less than a minute. “Machines can take away about 80 per cent of the work,” he believes, although he cautions that “in a number of cases, humans will [still need] to make judgements about what should be included” in the review.

Another area in which machines already excel is finding patterns in data, Murray-Rust explains. This might sound like low-level statistical grunt work, but it means that they are technically capable of making some of the most famous discoveries in science. “A clear pattern in science is something like the periodic table,” he says. If a machine were fed data about the properties of elements, it could produce “something that has many of the features of the periodic table”.

Nonetheless, it would still take a human to decide whether such a thing was a useful scientific concept, and whether it could be used to make predictions, he points out. And he thinks that coming up with a new concept, such as Darwinian evolution, would be beyond the current capabilities of a machine.

Schmidt admits that the most Eureqa can currently do is iterate variations of existing experiments to get more accurate explanatory models by gathering new data points. His company is working on systems with “computational curiosity” that would be able to come up with wholly new ideas, but that is “probably two to three decades” away.

Print headline: I, graduate