
Historians once told arching stories of scale. From Gibbon, Mommsen and Fustel de Coulanges on the rise and fall of empires to Macaulay and Michelet on the making of modern nations and Mumford and Schlesinger on modern cities, historians dealt with long-term visions of the past over centuries or even millennia.

Nearly 40 years ago, however, this stopped. From about 1975, many (if not most) historians began conducting their studies on much shorter timescales, usually between five and 50 years. This compression can be illustrated bluntly by the average number of years covered by doctoral dissertations in history in the US. In 1900, the period was about 75 years; by 1975, it was closer to 30. Command of archives, total control of a ballooning historiography and an imperative to reconstruct and analyse in ever-finer detail had become the hallmarks of historical professionalism, and grand narratives became increasingly frowned upon.

Two thousand years after Cicero minted the phrase historia magistra vitae – usually translated as “history is life’s teacher” – the ancient aspiration for history to be the guide to public policy had collapsed. As Harvard historian Daniel Lord Smail writes in a 2011 article in French Historical Studies, “the discipline of history, in a peculiar way, ceased to be historical”. At the same time, history departments lay increasingly exposed to new and unsettling challenges: waning enrolments, ever more invasive demands from administrators and their political paymasters to demonstrate “impact”, and internal crises of confidence about relevance amid the emergence of new disciplines such as political science, which were enjoying swelling classrooms, greater visibility and more obvious influence in shaping public opinion. So professional historians ceded the task of synthesising historical knowledge to unaccredited writers. Simultaneously, they lost whatever influence they might once have had over policy to colleagues in the social sciences – most spectacularly, to the economists.

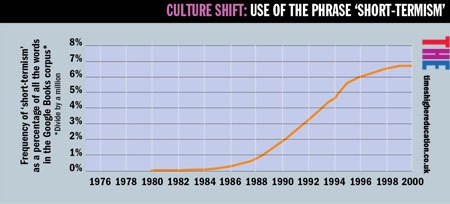

This narrowing of vision reflected the turn from long-term to short-term thinking that took place in culture at large in the 1980s. Few politicians planned beyond their next bid for election and companies rarely looked beyond the next quarterly cycle. The phenomenon even received a name – short-termism – the use of which skyrocketed in the late 1980s and 1990s. It has few defenders, but short-termism is now so deeply ingrained in our institutions that it has become a habit – something much complained about but not often diagnosed.

Yet there are signs that the long term and long range are returning. The scope of doctoral dissertations in history is widening. Professional historians are once again writing monographs covering periods of 200 to 2,000 years, or even longer. And there is an expanding universe of historical horizons, from the “deep history” of the human past, stretching over 40,000 years, to “big history” going back 13.8 billion years to the Big Bang.

One reason for this shift has been the rise, during the past decade, of big data applied to problems of vast scale, such as climate change, international governance and inequality. This has caused a return to questions about how the past changed over the centuries and millennia, and what that can tell us about our survival and flourishing in the future. It has also brought a new sense of responsibility and urgency to the work of scholars, as well as a new cross-disciplinary search for methods appropriate to looking at questions on long timescales.

Attempts to do this have driven academics in many disciplines, including economics, to grapple with data that are essentially historical – and, increasingly, to turn towards the methods of historians. For example, in the 1990s, in the course of studying the social effects of capitalism, American economists Paul Johnson and Stephen Nicholas used the height and weight of individuals when they were first admitted to prison as proxies for trends in working-class people’s nutrition and, by extension, well-being over the course of the 19th century. The evidence seemed to suggest that nutrition was improving: prisoners in 1867 were typically taller and heavier than prisoners in 1812. But a decade later, some British economists reconsidered the data. The data revealed, counter to the original thesis, that the weight of working-class women actually went down over the course of the Industrial Revolution. What we now understand, thanks to Cambridge economist Sara Horrell, is that the mothers and wives of working-class men had been starving themselves – skipping meals, passing on the biggest servings – to make sure that their mill-working or ship-loading husbands had enough energy to survive their industrial jobs. When first admitted to prison, most of the working-class women in English prisons were so thin and frail that they actually gained weight from the few cups of meagre gruel permitted to them by national authorities concerned to deter lazy paupers from seeking welfare at houses of correction.

In the field of big data, the sensitivity to agency, identity and personhood associated with the practice of history has much to contribute to epistemology and method in a variety of disciplines. The prison study reminds us, contra neoliberal histories of the Industrial Revolution, of the way that class and gender privilege annihilated the victories of entrepreneurial innovation in the experience of the majority. Without what Horrell calls “the wonderful usefulness of history”, the sensitivity to gender and age that she acquired from her reading of social historians, the evidence was seen merely to reinforce the prejudice of economics that Victorian industrialisation produced taller, better-fed proletariats.

Big data has frequently been used to suggest that we are locked into our history, our path dependent on larger structures that arrived before we got here. For example, a 2013 article in The Quarterly Journal of Economics by three American economists, “On the origins of gender roles: women and the plough”, tells us that modern gender roles have structured our genes and our preferences since the development of agriculture; a 2010 paper in the American Economic Journal: Macroeconomics asks: “Was the wealth of nations determined in 1000 BC?”. Evolutionary biology has also seen an abundance of data being interpreted according to one or two hypotheses about human agency and utility, and our genes have been blamed for our systems of hierarchy and greed, for our gender roles and for the exploitation of the planet itself.

Yet gender roles and systems of hierarchy show enormous variation across human history: variation that is abstracted out of sight when we work with static social science models. When economists and political scientists talk about limits to growth and how we have passed the “carrying capacity” of our planet, historians recognise that they are rehearsing not a proven fact but a theological theory that dates from the Reverend Thomas Robert Malthus. Modern economists have removed the picture of an abusive God from their theories, but their theory of history is still, at root, an early 19th-century one, where the universe is designed to punish the poor, and the experience of the rich is a sign of their obedience to natural laws. As University of Hertfordshire economist Geoffrey Hodgson has argued, “mainstream economics, by focusing on the concept of equilibrium, has neglected the problem of causality”. “Today,” he concludes, “researchers concerned wholly with data-gathering, or mathematical model building, often seem unable to appreciate the underlying problems.”

Historians understand that natural laws and patterns of behaviour do not bind individuals to any particular fate: that individual agency is one of many factors working to create the future. Good history identifies myths about the future and examines where evidence comes from. It looks to many different kinds and sources of data to gain multiple perspectives on how past and future were and may yet be experienced by a variety of different actors. Without this grasp of multiple causality, fundamentalism and dogmatism prevail. These claim that there can be only one future – the future of environmental collapse, or of rule by economists, robots or biological elites – because we are, in their diminished understanding of history, creatures predetermined by an ancient past. By raising the question of how we have learned to think differently from our ancestors, we rise above such misinformed use of data and theories that were collected by other generations for other purposes.

The arbitration of data is a task in which the history departments of major research universities will almost certainly take a lead because it requires talents and training that no other discipline possesses. Historians are trained to synthesise the various data even when they come from radically different sources and times. They are adept at noticing institutional bias, thinking about origin, comparing data of different kinds, resisting the powerful beckoning of received mythology and understanding that there are different kinds of causation. And ever since Max Weber’s work on the history of bureaucracy, historians have been among the most important critical examiners of the way “the official mind” collects and manages data from one generation to the next.

As well as writing critical long-term perspectives on the issues of climate change, governance and inequality mentioned above, historians are becoming the designers of tools for analysing data. For instance, one of us, Jo Guldi, has co-designed a toolkit called Paper Machines for aggregating and analysing large numbers of documents. Developed with Brown University ethnomusicology postgraduate Christopher Johnson-Roberson, it allows scholars to track the rise and fall of textual themes over decades, allowing them to generalise about wide bodies of thought, such as things historians have said in a particular journal, or to compare thematic bodies of text, such as novels about 19th-century London versus novels about 19th-century Paris. This facilitates the construction of hypotheses about longue-durée patterns in the influence of ideas, individuals and professional cohorts.

The future training of researchers across a wide range of disciplines should be based around the interrogation of big data – such as that relating to era, gender, race and class – in search of turning points and insights into processes that take a long time to unfold. And the aptitude of historians for curating and critiquing statistics, expertise and professionalism among other communities makes them ideally suited to be the arbiters of this information. Armed with critical transnational and trans-temporal perspectives, they can be the public’s guardians against parochial, self-serving perspectives and endemic short-termism.