Students who achieve the same set of marks are being awarded widely divergent final degree scores, owing to the use of different algorithms by UK universities, an analysis reveals.

The use of varying degree algorithms (the set of rules used to translate module outcomes into a final degree classification) means that an institution could have double the proportion of first-class degree holders of another university with an identical set of student grades, according to a working paper.

The study by David Allen, associate head of department (programmes) at the University of the West of England’s Bristol Business School, draws on a recent report from Universities UK and GuildHE, which surveyed 120 UK institutions (113 of which hold degree-awarding powers) on their use of degree algorithms.

Mr Allen also collected the actual module marks awarded to three cohorts of students on a medium-sized degree programme at a large English university across their three-year degrees.

In the paper, “Degree algorithms, grade inflation and equity: the UK higher education sector”, he combines the two datasets to investigate the impact that the choice of algorithm would have on the students’ final degree scores, and ultimately degree classifications.

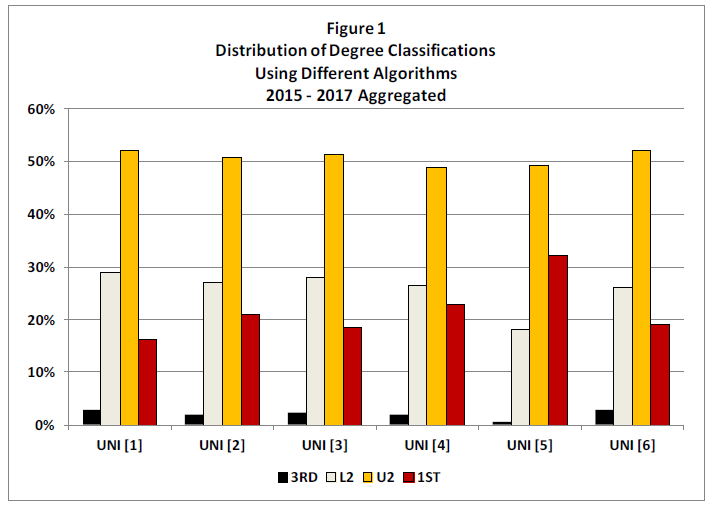

The results show that the proportion of students in the sample who would receive a first-class degree ranged from 16 to 32 per cent, depending on which of six degree algorithms was used (based on five existing algorithms and one grade point average calculation that counts marks from all years equally).

The proportion of students in the sample who would receive a first or a 2:1 ranged from 68 to 82 per cent.

Distribution of degree classifications using different algorithms, 2015-17 cohorts (aggregated)

A separate calculation found that one individual’s final mark ranged from a high 2:1 (66.7 per cent) to a low first (70.7 per cent) across nine different algorithms.

The variation comes from how the average mark of each year of study is weighted and, in particular, whether some module marks are discounted or removed from the calculation.
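To make those two levers concrete, here is a minimal Python sketch of a generic degree algorithm, not Mr Allen's model or any university's actual rules. It assumes credit-weighted year averages, a year weighting such as the 0/25/75 split listed in the table below, and a hypothetical discounting rule that drops each year's lowest-marked module(s). The names `Module`, `year_average`, `final_mark`, `classify` and `discount_lowest` are illustrative, and the module marks are invented for the example. The classification boundaries (70, 60, 50 and 40 per cent) are the conventional UK ones.

```python
from dataclasses import dataclass


@dataclass
class Module:
    name: str
    credits: int
    mark: float  # percentage mark, 0-100


def year_average(modules: list[Module], discount_lowest: int = 0) -> float:
    """Credit-weighted mean of one year's marks, after (hypothetically)
    discarding the `discount_lowest` lowest-marked modules."""
    if discount_lowest >= len(modules):
        raise ValueError("cannot discount every module in a year")
    kept = sorted(modules, key=lambda m: m.mark)[discount_lowest:]
    total_credits = sum(m.credits for m in kept)
    return sum(m.mark * m.credits for m in kept) / total_credits


def final_mark(years: list[list[Module]], weights: list[float],
               discount_lowest: int = 0) -> float:
    """Combine year averages using year weights expressed as fractions summing to 1."""
    return sum(w * year_average(y, discount_lowest) for y, w in zip(years, weights))


def classify(mark: float) -> str:
    """Map a final percentage to a classification using conventional UK boundaries."""
    if mark >= 70:
        return "First"
    if mark >= 60:
        return "2:1"
    if mark >= 50:
        return "2:2"
    if mark >= 40:
        return "Third"
    return "Fail"


# Invented marks for one student: six 20-credit modules per year.
year2 = [Module(f"Y2-{i}", 20, m) for i, m in enumerate([65, 68, 66, 64, 67, 60])]
year3 = [Module(f"Y3-{i}", 20, m) for i, m in enumerate([72, 74, 71, 73, 70, 52])]

# Year 1 carries zero weight under a 0/25/75 scheme, so only years 2 and 3 are passed.
plain = final_mark([year2, year3], [0.25, 0.75])
discounted = final_mark([year2, year3], [0.25, 0.75], discount_lowest=1)

print(f"No discounting:   {plain:.2f} ({classify(plain)})")
print(f"Drop lowest mark: {discounted:.2f} ({classify(discounted)})")
```

With these invented marks, the same transcript yields 67.75 (a 2:1) with no discounting and 70.50 (a first) once each year's weakest module is dropped. A single poor final-year module is enough to move the student across the first-class boundary.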

“The simulation carried out here shows that the varying use of differential weighting and the discounting of module marks creates artificial differences in the degree outcomes between universities,” the study says.

The results also appear to refute the claim made in the UUK/GuildHE study that the use of more than one algorithm across departments within one university “appears to have a limited impact on the overall profile of awards made”.

Differential weightings at UK universities (%)

| Year 1 | Year 2 | Year 3 | Number of universities | Example universities |
|---|---|---|---|---|
| 0 | 0 | 100 | 8 | |
| 0 | 20 | 80 | 6 | University of Derby |
| 0 | 25 | 75 | 18 | University of Birmingham; University of Hertfordshire; University of the West of England |
| 0 | 30 | 70 | 13 | |
| 0 | 33 | 67 | 19 | University of Manchester; University of Nottingham |
| 0 | 40 | 60 | 19 | University of Kent; University of East Anglia |
| 0 | 50 | 50 | 8 | Oxford Brookes University |
| 10 | 30 | 60 | 4 | |
| 11.1 | 33.3 | 55.6 | 2 | |
| 11.1 | 44.4 | 44.4 | 1 | |

Source: UUK/GuildHE survey and David Allen
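To see how much the weighting alone can matter, the following Python sketch applies every weighting in the table to a single hypothetical student whose year averages improve from 58 to 64 to 71 per cent. These averages are invented for illustration and are not data from the study. Module discounting is ignored here. The helper names `weighted_mark` and `classify` are assumptions. Each weighted mark is divided by the row's weight total so that rows summing to 99.9 after rounding still produce a true weighted mean.

```python
# Year weightings (per cent) from the table: (Year 1, Year 2, Year 3).
WEIGHTINGS = [
    (0, 0, 100),
    (0, 20, 80),
    (0, 25, 75),
    (0, 30, 70),
    (0, 33, 67),
    (0, 40, 60),
    (0, 50, 50),
    (10, 30, 60),
    (11.1, 33.3, 55.6),
    (11.1, 44.4, 44.4),
]


def weighted_mark(year_averages, weights):
    """Weighted mean of year averages, normalised by the weight total."""
    return sum(a * w for a, w in zip(year_averages, weights)) / sum(weights)


def classify(mark):
    """Conventional UK classification boundaries."""
    if mark >= 70:
        return "First"
    if mark >= 60:
        return "2:1"
    if mark >= 50:
        return "2:2"
    if mark >= 40:
        return "Third"
    return "Fail"


# Hypothetical year averages (Year 1, Year 2, Year 3); not from the study.
student = (58.0, 64.0, 71.0)

results = {w: weighted_mark(student, w) for w in WEIGHTINGS}
for w, mark in results.items():
    label = "/".join(str(x) for x in w)
    print(f"{label:>16}  {mark:5.1f}  {classify(mark)}")
```

For this student, only the 0/0/100 scheme produces a first (71.0). Every other row yields a 2:1, ranging from 69.6 under 0/20/80 down to 66.4 under 11.1/44.4/44.4. Students whose marks rise through their degree benefit most from schemes that weight the final year heavily.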

The findings will add weight to fears about grade inflation at UK institutions and heighten concerns about whether some universities are changing algorithms in order to improve degree outcomes.

A 2015 report from the Higher Education Academy found that almost half of UK universities had changed how they calculated degree classifications to ensure that their students did not receive lower grades, on average, than those at rival institutions.

However, the UUK/GuildHE survey suggested that the motivations for changes were “more benign” than the HEA suggested, with respondents claiming that they were a means of “refreshing regulations in line with best practice, and to remove inappropriate barriers to student success”.

Mr Allen told Times Higher Education that he was concerned by the lack of equity across the university sector and he suggested that all universities adopt the same algorithm when classifying degree outcomes.

The use of different algorithms is “affecting the life chances of some of these students, which is the real big issue”, he said.

The findings could also affect student choice if the differences between universities' algorithms were made public, he said.

“If Oxford Brookes’ students [for example] realised that somehow or [other] they’re not benefiting from some of the rules offered in other universities, they’re going to be mightily pissed off,” he said.
