Recently the media had fun comparing the vision of life in 2015 depicted in the 1989 film Back to the Future Part II with the reality – with the internet being the glaring omission. But what if we were to try to predict the academy’s future? Could we do a more accurate job? After all, isn’t that one of the tasks of university leaders, given that the future is coming even to those who don’t have a time machine in their sports cars?

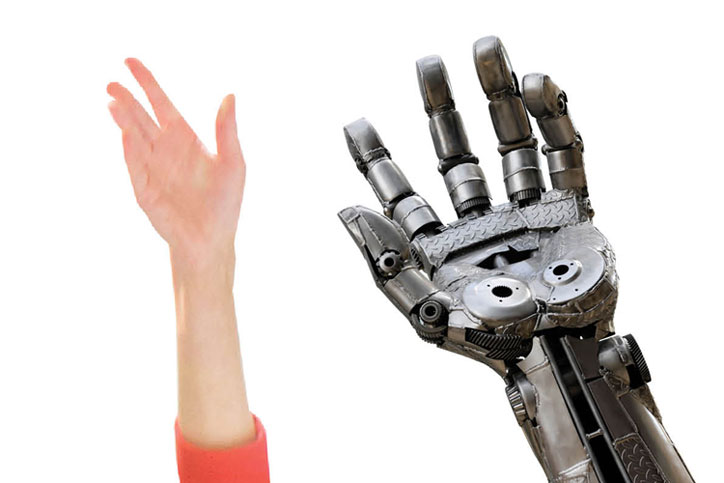

We asked several distinguished academics to tell us how they imagine higher education will look in 2030. The responses, however, could hardly be more disparate. While one contributor suggests that the rise of artificial intelligence will consign the university to history within 15 years, others believe that technology will continue to have minimal impact. A variety of shades of opinion in between are also set out.

No doubt all this goes to show that predicting the future – as any gambler knows – is a mug’s game. But our contributors’ attempts to do so raise a number of important issues that need to be addressed regardless, such as how universities should be assessed, what the right balance is between technology and human contact and whether job prospects in the academy are likely to get better or worse.

And there is also no denying the fun in crystal ball gazing – although readers may be disappointed to find no mention of students and academics rushing between lectures using that usual staple of futurology, the jetpack.

In 15 years, we will have no one to teach. The professional jobs for which we prepare students will be done by intelligent machines

The impact of robotics and artificial intelligence on every aspect of our lives is grossly underestimated. If we cautiously allow a doubling of technological impact every 18 months, 10 doublings in 15 years gives an increase of 1,000 times by 2030. Imagine your mobile device 1,000 times more effective.
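
The arithmetic behind that figure can be sketched as follows, taking the premise of one doubling every 18 months at face value. The symbols $n$ (number of doublings) and $g$ (overall growth factor) are introduced here purely for illustration and do not appear in the original argument.

$$
n = \frac{15\ \text{years}}{1.5\ \text{years per doubling}} = 10,
\qquad
g = 2^{n} = 2^{10} = 1024 \approx 1{,}000 .
$$

The result is only as strong as the assumption that "technological impact" is a single quantity that doubles on a fixed schedule; a doubling period of two years instead would give $2^{7.5} \approx 181$ over the same 15 years.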

Machine learning shatters the notion that computers can only do as they are told. There are increasing examples of machine creativity. An artificial intelligence recently “discovered” Newton’s second law, and derived the equations of motion of a double pendulum system by doing experiments for itself on a double pendulum. ROSS, a “super intelligent attorney” that scours the entire body of law, has trained IBM’s Watson cognitive computer to do paralegal work; Watson already handles simple cases by itself. Artificial intelligence is also able to make medical diagnoses, and there are robot surgeons. Financial systems run on algorithms. A University of Oxford report, The Future of Employment: How Susceptible are Jobs to Computerisation?, argues that nearly 50 per cent of US jobs are at risk from technological advancement – and this is almost certainly an underestimate. Optimists say that new jobs will appear, but they are unable to give a single concrete example. There will soon be no jobs needing proof of academic ability.

Looked at that way, it is clear that the university has no future. In 15 years, we will have no students to teach. Students want a good, professional job and degrees are evaluated against employability. But the professional jobs for which we currently prepare students will be done by intelligent machines. So why would students take on the debts involved in undertaking a degree course as it is conceived today?

The academic response to the technological deluge has been to shove some IT and a bit of programming into the syllabus. This is akin to applying a sticking plaster to a decapitated and dying body. Massive open online courses open the academic treasure trove to many people if supported by live online tutors, but this will not provide academics with a lifeline indefinitely. IBM’s Watson is being trained to answer call centre queries in natural language. It would also make an ideal tutor for Moocs: always available and always up to date.

Moreover, many academics, appointed for their research ability, are unable to inspire their students, and are blind to their personal and emotional needs. The Massachusetts Institute of Technology, meanwhile, has established an “Affective Computing Group” within its Media Lab. Heather Knight, a PhD student at Carnegie Mellon University in Pittsburgh, attended a drama course and is now training her robots to express emotion and to “understand” humour. The first two production runs of a Japanese companion robot, called Pepper, sold out in less than a minute. Robots are learning to simulate kindness and caring better than most humans.

But while universities and academics will be consigned to history, learning may yet survive. Assuming we survive the transition to global unemployment, many will wallow in the hedonism and feelings of the Brave New Technological World. But if I am still alive by 2030, I hope to have a wise and erudite AI tutor and mentor. It could be humanoid or simply an app on a device I carry. Always knowing my state from the sensors I wear, it will know when and how best to take me through the ideas I have always wanted to enjoy, using Platonic dialogues to ensure I explore and prove my understanding.

I hope my AI tutor will link me up with other people who also enjoy bright ideas and challenges to the mind, allowing us to associate in a form of university freed from the burdens of the factory processes that now demean so much of what academics do. But this happy ending will come to pass only if those in power see the technological juggernaut for what it is and begin to work out what needs to be done before we all become roadkill on the information superhighway.

Eric Cooke is a retired senior tutor from the department of electronics and computer science at the University of Southampton.

The pedagogic pendulum will swing back towards the lecture as the importance of an analytical mind becomes appreciated once more

At present, universities are in a race to “flip the classroom”. In the name of superseding tedious, droning lecturers and their passive, sometimes slumbering – or even absent – student audiences, we are embracing electronically enhanced “active learning”. Now students can stay at home, absorb lecture content online and then come to campus merely for tutorials to discuss what they didn’t understand from their laptop.

The printed textbook market is in worldwide decline, as students increasingly rely on online search engines, online lecture notes and recorded lectures for their information. Digital disruption is everywhere, as more and more universities establish massive open online courses, offering their best professors to a universal public through global online platforms, entirely free of charge.

But is this a revolution with long-term, transformational consequences? Or is it simply an extreme phase in the cycle of pedagogic fashion, from which the pendulum will have swung back towards more traditional campus norms by 2030?

Lost in the clamour for active learning and digital enhancement has been any defence of the unique, vital skills the traditional lecture develops. Tangibly, our tweeting, blogging, app-loving students are losing the capacity to listen at length, absorb a complex argument and summarise, dissect and evaluate what they hear as they hear it.

No doubt we are producing more alertness in students than we often had in the traditional lecture theatre: we are starting to compete better for their attention amid the distracting din of digital communication and entertainment that surrounds them. But how many will graduate with the capacity to comprehend a line of reasoning, to focus on its meaning, master its contentions and respond with a critical perspective? An analytical mind is a fundamental graduate attribute in any field.

Too many of our students now graduate as competent surfers of a digital wave of bite-sized communications, saturated in a sea of information but unable to navigate the wider ocean in search of deep understanding. And, for this reason, my prediction for the coming 15 years is that the pedagogic pendulum will indeed swing back towards the lecture.

I don’t mean to suggest that universities will abandon e-learning and online enhancements, for these continue to change every part of our lives. Rather, the lecture will be re-energised, as the importance for students of a clear, focused and analytical mind becomes appreciated once more, and the need to control unfettered access to digital devices in education is understood.

Universities will ban laptops and smartphones from their classes, to regain the lecture or seminar room as a place where multitasking is suspended in favour of sustained attention to a single topic. Universities will need to overtly teach note-taking to every student, to revive the dying manual art of precis, distillation and organisation that is so critical to giving meaning to a lecture. And, by 2030, universities will have adopted a code that requires every recorded lecture, online course vehicle or Mooc to be balanced with face-to-face, academic-led dialogue, in which a student’s ability to reason and argue is methodically polished.

Warren Bebbington is vice-chancellor of the University of Adelaide, Australia. In 2013, he announced that Adelaide would offer considerably fewer live lectures and considerably more small-group teaching.

Exams that emphasise mastery of taught knowledge will no longer be the primary tool for judging student performance

In many Jane Austen novels, the plot involves landing the best bachelor, at which point the story ends. We find a similar narrative in secondary schools across the US. This plot involves getting into the best college. For students and parents, landing a college place has become the defining symbol of a successful childhood, and their lives are organised towards hooking the prize catch.

So bricks and mortar universities will not disappear any time soon. But while it might be where Austen leaves off, acceptance by the object of their desire is only the beginning of our happy young protagonists’ life stories. Indeed, students at least need to finish their college years before they even get their bachelor – of arts or science. And that is where a number of enhancements are likely to be introduced by 2030.

First, educators will have figured out how to teach really hard concepts – imaginary numbers, quantum physics, a satisfying interpretation of T. S. Eliot’s The Love Song of J. Alfred Prufrock. Science will have made substantial progress in understanding how people learn and how to produce conditions that optimise learning. New technologies that deliver instruction will also collect precise data on what’s helping students the most and what is not working. A virtuous cycle of rapid feedback and revision to pedagogical innovations will permit the continuous improvement of both instruction and the scientific theories behind it.

Second, exams that emphasise mastery of taught knowledge will no longer be the primary tool for judging student performance. Instead, assessments will evaluate how well students are prepared for future learning – which is the point of university anyway. Students will be presented with new content – material they haven’t been taught in class – and evaluated by how well they learn from that content. In a world where jobs and knowledge change rapidly, assessments should measure students’ will and ability to continue learning.

Third, universities’ departmental fiefdoms will be broken up to support the interdisciplinary efforts needed to create innovative solutions to major societal problems, such as reducing reliance on non-renewable resources. Meeting great challenges depends on expertise from all the sciences and humanities, and bureaucratic and cultural barriers to problem-focused research must and will be removed.

This de-Balkanisation of university departments will also result in Health 101 becoming the most popular course. Advances in biology, medicine, psychology and nutrition will combine to offer strong prescriptions for the care of oneself and one’s children that everyone will need to know about; students will learn a range of basic disciplinary theories in an applied context, so that they can see the personal relevance.

New approaches to research, teaching and learning will require collaborative, creative students who know what it means to learn well. To ensure that they have such applicants, universities will need to fulfil their responsibility to pre-collegiate education. This includes pioneering ways to ensure that all children have an opportunity to learn well at the schools that will prepare them for a different – but still happy – ending to their childhoods. College admission will no longer serve as the dreamy end point, but as just one chapter in a long life of learning.

Dan Schwartz is dean and Candace Thille is assistant professor at Stanford University’s Graduate School of Education.

Technology has found a place in universities, but nothing significant has changed

Times Higher Education has invited writers to imagine what higher education will look like in 2030. But how will our prophecies look to future generations? To get some idea, let’s look back and see how yesterday’s pundits imagined things would look today.

In 1913, Thomas Edison predicted that “books will soon be obsolete” because educators would “teach every branch of human knowledge with the motion picture”. As we know, books survived (even if they are now migrating on to digital platforms), and motion pictures have had almost no influence on education. Still, Edison’s failure did not dampen the optimism of technology gurus. Over the past century, every new technology supposedly heralded a revolution in higher education.

In the 1930s, it was radio; in the 1960s, it was television. The world’s leading experts would be beamed into lecture rooms, reducing the need for skilled lecturers on every campus. A few decades later, it was videodisks (remember them?). In 1998, The Age, the Melbourne-based newspaper, claimed that “teaching will [soon] take place...using the latest in advanced technology...the mobile phone”.

Students and lecturers communicating by voicemail seems quaint today, but this is a common reaction when we look back at past predictions: they are almost always wrong. Nevertheless, a potent combination of enthusiasm, tunnel vision and cockeyed optimism keeps them coming.

For more than 30 years, Silicon Valley seers have claimed that personal computers, laptops, tablets, internet-connected whiteboards, computerised marking, massive open online courses, computer games and social networks would all transform higher education. Learning would become automated: cheaper and speedier. All of these technologies have found a place in universities, but nothing significant has changed. Lectures remain ubiquitous; human beings still mark most examinations and costs keep rising.

Technology champions blame their failed forecasts on the hidebound conservatism of universities. This facile explanation misses a vital point. All universities use modern technologies to transmit information, provide practice (as in language learning or mathematics) and to communicate across distances. But higher education is not just a matter of information and drill; it is also about wisdom.

In Choruses from The Rock, T. S. Eliot asks: “Where is the wisdom we have lost in knowledge? Where is the knowledge we have lost in information?” Universities understand the differences. Information, knowledge and drill are necessary, but they are not sufficient. To be wise, students must connect what they are learning to knowledge in other areas, to the great work of the past and to their personal experience. They need to be inspired to delve beneath the surface to the meaning of the material they are studying. Universities are not just purveyors of knowledge and skills; they are social institutions designed explicitly to help make students wise. And wisdom does not come only from lectures; students also acquire it from one another. In our diverse institutions, they learn tolerance, acceptance and fair play.

I have no crystal ball, but I am willing to stick my neck out and make a prediction. Universities of the future will be much like those of today.

Steven Schwartz is the former vice-chancellor of Macquarie University and Murdoch University in Australia, and of Brunel University London.

Devices will replace academic faculty by 2030. The concept of individual campuses will slowly disappear. The two-semester pattern will be replaced by year-round learning

For the first 300 years after Harvard University was founded in 1636, American higher education consisted of young upper-class white men sitting in classrooms listening to lectures by older upper-class white men. Then, in the 1950s, a wave of change began that shows every sign of becoming a tsunami by the year 2030.

The first major change was the gender and racial composition of the campus, beginning with the introduction of women, followed by people of colour, into the student body, faculty and administration. Universal access for anyone who wishes to study or work in higher education will be significantly achieved by 2030.

Then came the rise of digital technology. The ubiquity of Google and Wikipedia means the days of rote learning are gone. From the collection of big data (used in administration and research) to the development of massive open online courses, the once intimate, hands-on college environment is morphing into a more impersonal, automated world in which students no longer absorb a faculty-designed curriculum but instead develop a high degree of academic self-direction.

Devices will replace faculty by 2030. There will be reliable e-learning options from numerous providers on multiple platforms, and students will select the ones most compatible with their preferred learning style. Earning “a degree” will lose importance as the range of credentials widens. Certificates from schools, workplaces and industry, alongside something akin to the merit badges earned by Scouts, will gain in respectability – especially once a new system of accreditation for them is developed.

Professors will typically appear remotely from some type of broadcast centre, and the concept of individual campuses will slowly disappear as more and more students pursue their studies from home, workplaces, park benches or coffee shops. Place-based education will not disappear entirely; as well as being places of learning, campuses are a force for socialisation, where children mature into adults through interaction with others before they embark on careers. But the traditional, highly inefficient two-semester pattern will certainly disappear, replaced by year-round learning.

The adoption of such technological innovations will be incentivised by the urgent need to make education more affordable. Salaries and benefits are the single biggest cost in all university budgets, but since considerably fewer academics will be required in the technological future, the inflationary pressure this imposes on tuition fees will be eased.

Academics will still be needed to conduct research, much of which – at least in the sciences and social sciences – will be externally funded. But where it will take place is not clear. Perhaps “community” labs will appear in tech zones, where academics can rent facilities like digital start-ups. And perhaps academics will compete on price in a much more aggressive way than they currently do, hungry for the credit, licences and patents that will presumably accrue to them alone as institutional affiliations die out. As Amazon founder Jeff Bezos says: “Your margin is my opportunity.”

Stephen Joel Trachtenberg is university professor of public service and president emeritus of George Washington University in Washington DC.

We will see a form of higher education that truly values a broader range of characteristics than those linked to subject knowledge or employability skills

There will be no higher education revolution over the next 15 years. Rather, we will see evolutionary development in several areas.

The first is provider types. Market forces demand that higher education providers clearly define their distinctive contribution to the catalogue of “choice” available to students, and I believe that we will see significantly more differentiation by 2030. Providers will be either very local, plugged into a powerful societal and economic network of regionally defined business, industry and cultural hubs, or they will be international brands, recognised as the “go to” organisations for the creation and dissemination of knowledge and for seeking solutions to global problems.

We will also see more specialised providers. In the UK, such providers are currently limited to certain discipline areas, such as the arts, law and business, but they are ripe for significant expansion into areas such as science, engineering and technology, perhaps sponsored by huge corporates. Will there, for instance, be “The Google University” by 2030?

Meanwhile, a renewed emphasis on partnerships, collaboration and networks will see university federations operating more formally across the globe. These, for instance, will bring together provider types with shared specialisms, or shared defining characteristics such as religious foundation.

These changes will also affect programmes of study. Some institutions will offer the opportunity to create a portfolio degree – “pick and mix” modules bolted together, delivered by multiple providers and probably enabling an accelerated route through degree-level study (of course, this is already happening in some quarters). I also think we will see a form of higher education that truly values a broader range of characteristics than those linked to subject knowledge or employability skills. Attributes such as wisdom, tolerance, emotional intelligence, ethical understanding and cultural literacy will be seen as even more vital in preparing for true global interaction and personal and corporate impact.

Another question is where higher education will be delivered in 2030. In this millennium we have seen huge advances in technology and social media that have revolutionised how we access and handle information and how we communicate. Education delivery is already flexible, portable and not tied to place, and this development will continue. However, technology will not dissolve the need for universities to exist in physical form. I am convinced that there will always be significant numbers of students who want to “go” to university, to be part of a community of learners, educators and scholars exploring, disassembling and co-creating knowledge.

This leads me to my fourth point. I hope that the developments described above will spark a renewed debate about the purpose of higher education and, crucially, the role of universities within it. Remembering that the term “university” is derived from the Latin universitas magistrorum et scholarium (roughly translated: “a community of teachers and scholars”), I believe that by 2030, higher education provision, in the UK at least, will be more clearly defined according to purpose and the nature of provision, such that only those providers that fully demonstrate the engagement of a community of teachers and scholars will be called universities. Other providers will be celebrated for being different and for offering choice.

In this way, we will see a global higher education landscape evolving that benefits in equal measure individuals, communities and the wider world in which we live.

Claire Taylor is pro vice-chancellor for academic strategy at St Mary’s University, Twickenham.

The real game changer will be viable measures of comparative student learning outcomes. These will lift teaching to a status closer to that enjoyed by research

Some changes over the next 15 years will be incremental and others transformative. One incremental change is that participation in higher education will continue to grow everywhere, despite the flattening of average graduate starting salaries; those who leave education at 16 or 17 will find it ever harder to embark on a career. Meanwhile, sharp-eyed university leaders and marketing departments will entice students with new work experience-based offerings and German-style technical and vocational programmes.

But the real game changer will not be vocational education. Still less will it be the wholesale adoption of massive open online courses in place of pedagogies. Neither the lecture theatre nor the campus will fade into history. There will be one big transformation: viable measures of comparative student learning outcomes, including value added between enrolment and graduation. These measures will be as revolutionary in their effects as global research rankings have been. They will quickly overshadow the subjective consumerist metrics derived from student satisfaction and student engagement surveys. They will enable national and international comparisons of student achievement. They will also pull attention away from crude instrumental measures of outcomes and back towards the core processes of knowledge and intellectual formation.

The most likely pathway to solid, educationally sound data on learning is the Tuning Academy’s current project on “Measuring and Comparing Achievements of Learning Outcomes in Higher Education in Europe”, known as Calohee. This measures outcomes in individual universities in five families of disciplines: engineering (beginning with civil engineering), healthcare (nursing), humanities (history), natural sciences (physics) and social sciences (education). Tuning has proceeded slowly and methodically, nesting its approach to cognitive formation in specific bodies of knowledge rather than opting for US-style generic competency tests. It has now drawn in more than 100 higher education sectors in Europe and Latin America and this provides a much stronger base for adoption than the Organisation for Economic Cooperation and Development’s unsuccessful Assessment of Higher Education Learning Outcomes (Ahelo) project.

Credible comparative measures of learning outcomes will finally, after all the talk, lift teaching to a status closer to that enjoyed by research. Of course, this will also provide a wider range of universities – and countries – with a chance to shine. Over time, most of the leading universities will do well in the learning measures: they have the resources and the smarts to meet the challenge. But new players will emerge, and students will have a stronger set of data for making choices. And instead of trends towards grade inflation and lighter workloads to prop up satisfaction indicators, we will all have incentives to continually improve actual cognitive development.

Simon Marginson is professor of international higher education at the UCL Institute of Education, and director of the Economic and Social Research Council/Higher Education Funding Council for England Centre for Global Higher Education.

POSTSCRIPT:

Print headline: Future perfect?
